Last month at Networking Field Day 10 (NFD10), Juniper presented Junos Fusion, a solution that brings simplicity to the data center. It gives you a single pane of glass for managing all the switches in the fabric and lets you upgrade every switch from a central interface.

With Junos Fusion, all the access switches (called satellite devices) are managed from a single aggregation device or a pair of them. The access devices can be either EX 4300 or QFX 5100 series switches. They run a Wind River Linux distribution known as the Linux Forwarding Operating System (LFOS), which is decoupled from the Junos operating system running on the aggregation devices. The aggregation devices are the new QFX 10000 series switches and run classic Junos. Juniper uses LLDP between the aggregation and access devices for auto-discovery and provisioning, and an extended version of IEEE 802.1BR ("802.1BR+") for configuring and monitoring the ports. NETCONF runs between the aggregation devices to keep their configurations in sync.

The feature itself is not new: Juniper MX edge routers have supported it for a while, but this year Juniper extended support to its data center switches.

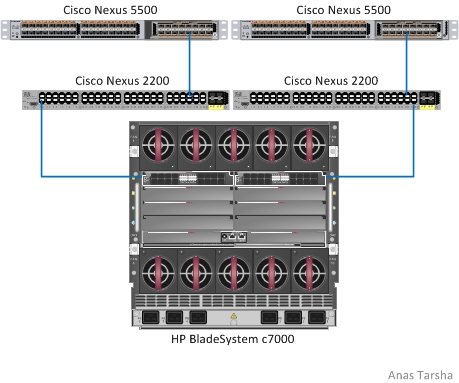

From an operational perspective, Junos Fusion is very similar to Cisco Fabric Extender (FEX). Both make the fabric look, from the outside, like one big switch with a single IP address.

So if you are building a data center fabric, should you go with Junos Fusion or Cisco FEX? Here are a few things to consider when comparing the two architectures:

Port Density: How many server ports do you need? Both Junos Fusion and Cisco FEX currently support up to 64 access switches per fabric. The Nexus 2200 (FEX) has only 48 extended (server) ports, which gives you a total of 3,072 (64 x 48) ports per fabric. The QFX 5100-96S has 96 server ports, which gives you a maximum of 6,144 (64 x 96) ports per fabric. Junos Fusion clearly scales better when it comes to port density.
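To make the port-density arithmetic explicit, here is a minimal Python sketch. The helper and constant names are hypothetical; the 64-switch limit and the per-switch server port counts are simply the figures quoted above, and uplink ports are ignored.

```python
# Hypothetical helper for the port-density comparison above.
# Assumption: 64 access switches per fabric and the per-model server
# port counts quoted in the text; uplinks are not counted.
MAX_SATELLITES_PER_FABRIC = 64

ACCESS_SERVER_PORTS = {
    "Nexus 2200 (FEX)": 48,
    "QFX 5100-96S (Junos Fusion)": 96,
}


def fabric_server_ports(ports_per_switch: int,
                        switches: int = MAX_SATELLITES_PER_FABRIC) -> int:
    """Total server-facing ports when every access switch is fully used."""
    if switches > MAX_SATELLITES_PER_FABRIC:
        raise ValueError(
            f"at most {MAX_SATELLITES_PER_FABRIC} access switches per fabric"
        )
    return ports_per_switch * switches


if __name__ == "__main__":
    for model, ports in ACCESS_SERVER_PORTS.items():
        print(f"{model}: {fabric_server_ports(ports)} server ports")
```

Running it prints 3072 ports for the Nexus 2200 fabric and 6144 for the QFX 5100-96S fabric. Passing a smaller `switches` value shows the capacity of a partially built fabric.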

Support For Local Switching & Other Features In the Access Layer: The Cisco Nexus 2200 has no brain, so it has no support for local switching, VLAN tagging, or the other features you typically see in an access switch. It's an "extender" and doesn't have the ASICs to switch traffic locally; everything is sent up to the parent switch. The QFX 5100/EX 4300, on the other hand, are full-blown switches with ASICs and intelligent software, and they support all the features mentioned above and more. L3 routing is not supported today on the QFX 5100/EX 4300 in Fusion mode, but Juniper stated that this feature is on the roadmap.

The need for local switching is a good debate to have. Some people argue that the Nexus 2200 is not a good fit for the data center because it cannot do local switching, but in my opinion this is not a fair assessment. Traffic patterns in the data center depend heavily on the type of workload. Some workloads, like Hadoop, generate heavy east-west traffic within the same VLAN; in such cases it's recommended to keep all the server nodes on the same ToR so traffic is switched locally and the uplinks don't get congested. However, many other workloads, notably web applications, don't generate heavy east-west traffic within the same VLAN.

The other thing to keep in mind is that with server virtualization, the edge of the network is moving into the hypervisor. Much of that intra-VLAN traffic is switched in the hypervisor kernel without ever leaving the physical host, which makes local switching on the ToR unnecessary. Even inter-VLAN traffic can now be routed without leaving the physical host if you have a distributed virtual firewall or router.

Ivan Pepelnjak has a nice blog post on the need for distributed switching in the Nexus 2000.

Cost: This is where the Nexus 2200 really shines. Because it's an extender and lacks full software and hardware capabilities, it's very affordable and can reduce your CapEx substantially.

My Take:

Both the Junos Fusion and Cisco FEX architectures simplify managing data center networks. When comparing the two solutions, examine your workload requirements, determine how intelligent your ToRs need to be, and from there decide which solution works best for you.

Here is the Junos Fusion presentation from NFD10:

Your Turn Now

What are your thoughts on this? Have you deployed either solution? I want to hear from you.

Disclaimer: I attended Networking Field Day 10 as a delegate. Vendors sponsoring the event indirectly covered my travel expenses; however, I'm not required to write about their products or about the event. If I do write something, it's because I want to express my opinions.
