I have been using the Cisco CSR 1000v as a default gateway in my home lab and I run an IPSec tunnel & LISP between it and my cloud provider (more on LISP in a separate post). The CSR 1000v runs on VMware ESXi, Microsoft Hyper-V, and Amazon Xen hypervisors but it can also run on a laptop/desktop hypervisor like VirtualBox or VMWare Fusion for testing or training purposes.

In this post I will show how to spin up a CSR 1000v instance in VMware Fusion for Mac.

Requirements:

1) I’m running VMWare Fusion 6.0 Professional but you can also run this virtual router on Fusion 4 or 5. I highly recommend using Fusion 5 Professional or 6 Professional if you want to create additional networks and assign the CSR 1000v interfaces to those networks. The feature to add additional networks (similar to Network Editor in VMWare Workstation) was added by VMWare in Fusion 5 Professional and it’s not available in the standard edition of Fusion. Alternatively if you don’t want to pay the extra bucks for the Professional edition, you can use this free tool from Nick Weaver to create the networks if you are running the standard edition. Nick’s tool isn’t the best but it does the job.

2) Go to Cisco and download the latest and greatest software version of the CSR 1000v. The CSR runs Cisco IOS XE and you need to download the OVA package for the deployment.

Installation:

The installation process is simple and quick:

1- Launch Fusion and go to File -> New from the top bar menu

2- Once the Installation wizard starts click on More options. Select Install an existing virtual machine and click Continue.

3- On the next screen click Choose File. Navigate to the folder containing the OVA package, select the file, and click Open and then Continue.

4- The next window will ask you to save the VM name, choose a name and click Save.

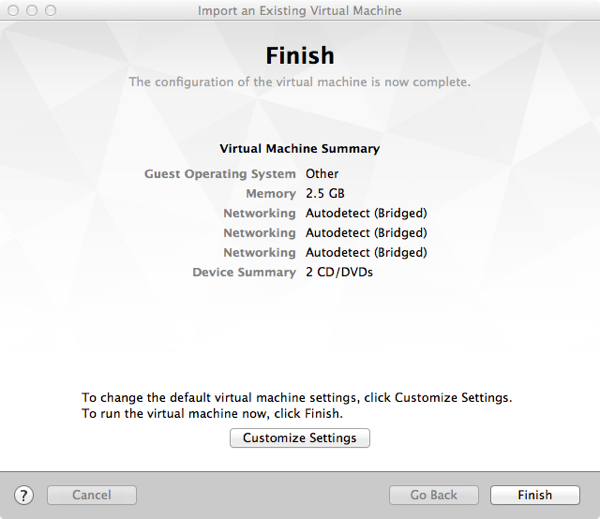

5- The final step of the wizard is to either customize or click fire up the VM. The CSR 1000v by default comes with three interfaces. If you need to add more interfaces click on Customize, otherwise click Finish.

6- Once you click Finish, the CSR 1000v will boot a couple of times and then you will be in traditional Cisco router User Mode.

Interfaces Management:

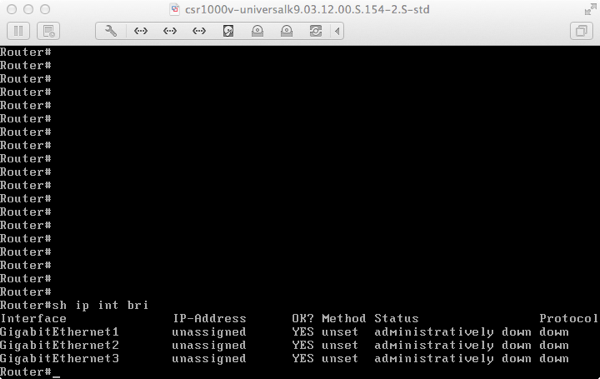

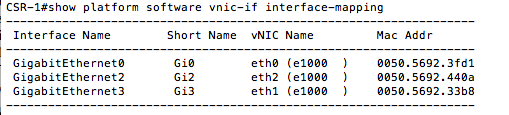

Enter Exec mode and enter “show ip int bri” to see the three interfaces.

By default interfaces will become part of the Ethernet or WiFi network (that’s done by Fusion) depending on which adapter is active during the installation and you can assign from here IP interfaces and default gateway.

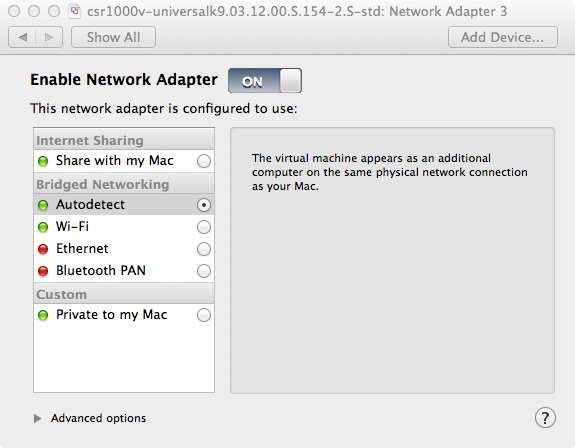

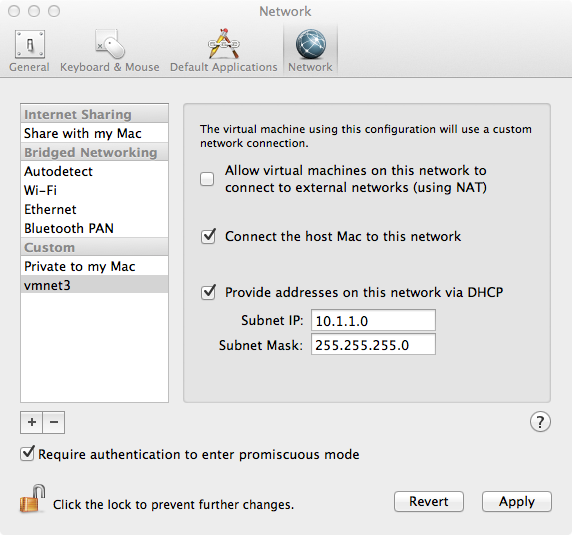

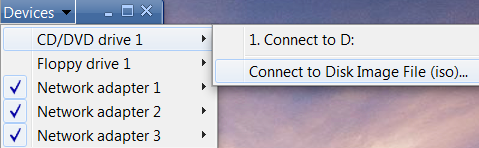

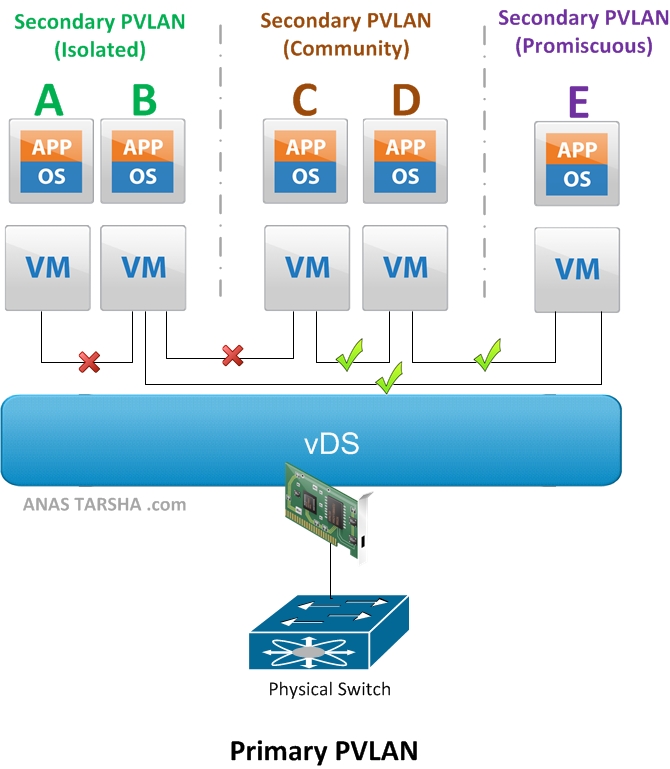

If you wish to put the interfaces on separate networks (VLANs), select the CSR VM and go to Virtual Machine -> Setting and choose the desired Network Adapter. You may also create custom networks by going to VMWare Fusion -> Preferences -> Network. From there add the + sign to add additional network and choose wether to enable DHCP or not.

Activating The License

In order to enable the full features of the CSR 1000v, you need to purchase a license from Cisco. If you want to try the full features before purchasing, Cisco offers 60 day free trial license. To activate the free trial license, go into the router configuration mode and enter: license boot level premium. You will be asked to boot the router after you enter the command.

Share This: