I have been looking for a way (other than a VPN) to secure my internet connection when I’m working outside the office, whether at Starbucks or at a conference, on an unsecured/open Wi-Fi hotspot.

I stumbled upon Sidestep for Mac the other day and decided to give it a try. It has worked well so far, and I really like it because it’s simple, lightweight, and free, and best of all, it connects automatically when it detects that I’m on an insecure wireless connection.

Sidestep uses an SSH tunnel to connect to a proxy server (an SSH server) and encrypts your data over it, so that other people connected to the same network cannot intercept your traffic.
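To make the idea concrete, here is a rough shell sketch that opens a similar tunnel by hand and checks that traffic really leaves from the server. It assumes the tunnel is an SSH dynamic (SOCKS) port forward; that is how this kind of tool typically works, but it is an assumption, not something taken from Sidestep’s documentation. The helper names `open_tunnel` and `check_ip` and the port 8080 are hypothetical.

```shell
#!/usr/bin/env bash
# Rough, hand-rolled version of the kind of tunnel Sidestep manages.
# Assumption: an SSH dynamic (SOCKS) port forward; port 8080 is an
# arbitrary example, not a Sidestep setting.

# open_tunnel USER HOST KEY [PORT]
#   -N  only forward traffic, do not run a remote command
#   -D  start a SOCKS proxy on local PORT; anything sent to it is carried,
#       encrypted, to HOST and leaves for the internet from there
#   -i  private key used to log in to HOST
open_tunnel() {
  local user="$1" host="$2" key="$3" port="${4:-8080}"
  ssh -N -D "$port" -i "$key" "${user}@${host}"
}

# check_ip [PORT]
# Prints the public IP seen without and with the proxy.
# checkip.amazonaws.com answers with the caller's IP as plain text;
# --socks5-hostname makes curl resolve DNS through the tunnel too.
check_ip() {
  local port="${1:-8080}" url="https://checkip.amazonaws.com"
  printf 'direct: %s\n' "$(curl -s "$url")"
  printf 'tunnel: %s\n' "$(curl -s --socks5-hostname "localhost:${port}" "$url")"
}
```

Once you have a server, run `open_tunnel ubuntu <hostname> <path to key>` in one terminal and `check_ip` in another: the “tunnel” line should show the server’s address, while the “direct” line still shows the hotspot’s.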

I first used a server I had at home as my proxy gateway, but the speed was not great, especially when I was streaming videos, so I decided to set up a proxy server on Amazon Web Services (AWS) instead. That solved my problem.

In this post I’m going to show how easy it is to set up a proxy server on AWS and use it to secure your open wireless connection:

- Download and install Sidestep on your Mac

- Head over to AWS and sign up for an account if you don’t already have one. If you are a new customer, you can use the AWS Free Usage Tier, which gives you the services you need for this setup for a year. If you are an existing customer who is no longer eligible for the free tier, you will have to pay for the service, but it is actually pretty cheap, and you can shut down the instance when you are not using it to save even more.

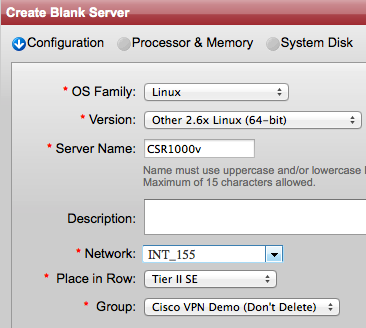

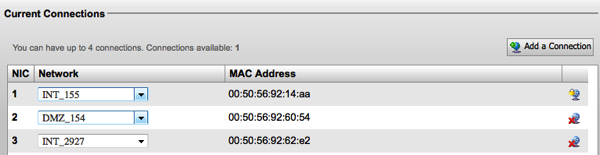

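- Use the EC2 Launch Instance wizard to create an m3.medium Ubuntu instance. Make sure your security group allows SSH (TCP port 22) from Anywhere, and download the key pair’s private key (the .pem file) to your machine. Note that an m3.medium is not covered by the free tier, which includes only micro instances such as t2.micro.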

- Once the instance is ready, click on it and copy the public DNS name from the bottom pane.

- On your Mac, open a Terminal window, go to the folder where you stored the SSH key (`cd <directory>`), and run the following command to make the key readable only by you: `chmod 400 <ssh_key.pem>`

- Now it’s time to test the connection and connect to the server. Launch Sidestep on your Mac and open Preferences. On the General tab, make sure that “Reroute automatically when insecure” and “Run Sidestep on login” are both checked.

- Click the Proxy Server tab and enter your username (the default user on Ubuntu instances is “ubuntu”) and the hostname (the public DNS name of your instance).

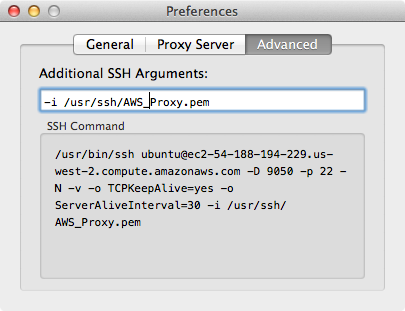

- Click the Advanced tab and enter the following in the Additional SSH Arguments field. It tells Sidestep to use your SSH key and where to find it: `-i <path to your SSH key>/<SSH key>`

- Go back to the Proxy Server tab and click Test Connection to Server. At this point you should see a “Connection succeeded!” message.

- Close the Preferences window and click Connect. If you now open your browser and search for “what is my IP”, the address shown will be your EC2 instance’s public IP address. From here on, Sidestep will automatically terminate the connection when you switch to a secure connection.
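If you prefer the command line to the console wizard, the AWS part of these steps (key pair, SSH rule, instance, public DNS name) can be scripted roughly as follows. This is an alternative sketch, not the way I set things up: it assumes the AWS CLI is installed and configured with `aws configure`, that your account has a default VPC and an existing security group, and the helper names (`create_key`, `open_ssh_port`, `launch_instance`, `public_dns`) are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch of the console steps with the AWS CLI. Assumes a configured CLI,
# a default VPC and an existing security group. Helper names are hypothetical.

# create_key NAME
# Create a key pair, save the private key as NAME.pem, readable only by you.
create_key() {
  local name="$1"
  aws ec2 create-key-pair --key-name "$name" \
    --query 'KeyMaterial' --output text > "${name}.pem"
  chmod 400 "${name}.pem"
}

# open_ssh_port GROUP_ID
# Allow inbound SSH (TCP 22) from anywhere on the security group.
open_ssh_port() {
  aws ec2 authorize-security-group-ingress --group-id "$1" \
    --protocol tcp --port 22 --cidr 0.0.0.0/0
}

# launch_instance AMI_ID KEY_NAME GROUP_ID
# Launch one m3.medium and print its instance ID.
# Ubuntu AMI IDs are region-specific, so look one up first.
launch_instance() {
  local ami="$1" key_name="$2" group_id="$3"
  aws ec2 run-instances --image-id "$ami" --instance-type m3.medium \
    --key-name "$key_name" --security-group-ids "$group_id" \
    --query 'Instances[0].InstanceId' --output text
}

# public_dns INSTANCE_ID
# Wait until the instance is running, then print its public DNS name
# (the value for Sidestep's hostname field).
public_dns() {
  aws ec2 wait instance-running --instance-ids "$1"
  aws ec2 describe-instances --instance-ids "$1" \
    --query 'Reservations[0].Instances[0].PublicDnsName' --output text
}
```

For example: `create_key sidestep`, `open_ssh_port <security group ID>`, `id=$(launch_instance <Ubuntu AMI ID> sidestep <security group ID>)`, then `public_dns "$id"`. The resulting `sidestep.pem` is the key you point to with `-i` in Sidestep’s Additional SSH Arguments field.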

Note: if you shut down your EC2 instance when you are not using it, be aware that its public IP address and DNS name will change when you start it again. That means you will need to update the hostname field in Sidestep every time you stop and start your instance. You can work around this by assigning a static Elastic IP address to the instance, or by installing a dynamic DNS update client (such as ddclient) on the Ubuntu server.
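For the Elastic IP route, here is a minimal AWS CLI sketch. It assumes a configured CLI and an instance running in a VPC (the default for newer accounts); `assign_elastic_ip` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: give the instance a static Elastic IP with the AWS CLI.
# Assumes a configured CLI and a VPC instance. Hypothetical helper name.

# assign_elastic_ip INSTANCE_ID
# Prints the new static address to use as Sidestep's hostname.
assign_elastic_ip() {
  local instance_id="$1" out
  # Allocate an address; text output is "<AllocationId><TAB><PublicIp>".
  out="$(aws ec2 allocate-address --domain vpc \
    --query '[AllocationId,PublicIp]' --output text)"
  set -- $out   # split into $1 = allocation ID, $2 = public IP
  aws ec2 associate-address --instance-id "$instance_id" \
    --allocation-id "$1" > /dev/null
  printf '%s\n' "$2"
}
```

Running `assign_elastic_ip <instance ID>` prints the address; enter it once in Sidestep’s hostname field and it stays the same across stop/start cycles.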

Comment if you find this post useful.