When deploying blade servers, it’s generally recommended to use blade switches in the chassis to reduce cabling, improve performance, and lower latency. However, blade switches increase the complexity of the server access layer and introduce an extra layer between the servers and the network. In this post I will go through a few options for connecting the popular HP c-Class BladeSystem to a Cisco unified fabric.

HP Virtual Connect Flex-10 Module

The Virtual Connect Flex-10 Module is a blade switch for the HP BladeSystem c7000 and c3000 enclosures. It reduces the number of cabling uplinks to the fabric and offers an oversubscription ratio of 2:1.

It has (16) 10GE internal connectors for downlinks and (8) 10Gb SFP+ ports for uplinks. However, this module does not support FCoE, so if you plan to support Fibre Channel (FC) down the road you would need to add a separate module to the chassis for storage. It also does not support QoS, which means you need to manually carve up the bandwidth among the Flex-NICs exposed to the vSphere ESX kernel for vMotion, VM data, console, etc. This can be an inefficient way to assign bandwidth, because a Flex-NIC gets only what’s assigned to it even if the rest of the 10G link is idle.
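To make the static carve-up concrete, here is a minimal Python sketch. The split of 3/6/1 Gb between vMotion, VM data, and console is a hypothetical example, not a recommended or documented configuration, and the function names are made up for illustration.

```python
LINK_GBPS = 10.0

# Hypothetical static allocation of one 10G port across three Flex-NICs.
FLEX_NIC_SHARE_GBPS = {"vmotion": 3.0, "vm_data": 6.0, "console": 1.0}


def static_throughput(demand_gbps):
    """Return what each Flex-NIC can send when capped at its fixed share."""
    return {
        nic: min(demand_gbps.get(nic, 0.0), cap)
        for nic, cap in FLEX_NIC_SHARE_GBPS.items()
    }


def idle_capacity(demand_gbps):
    """Return the link bandwidth left unused under the static allocation."""
    return LINK_GBPS - sum(static_throughput(demand_gbps).values())


if __name__ == "__main__":
    # Only vMotion is busy and wants 8 Gb; VM data and console are idle.
    demand = {"vmotion": 8.0}
    print(static_throughput(demand))
    print("idle Gb on the link:", idle_capacity(demand))
```

In this scenario vMotion is held to its 3 Gb share while 7 Gb of the link sits unused, which is the inefficiency described above.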

This module adds an additional management point to the network, as it has to be managed separately from the fabric (usually by the server team). The list price of the HP Virtual Connect Flex-10 module is around $12,000.

HP 10GE Pass-Thru Module

The HP 10GE Pass-Thru module for the BladeSystem c7000 and c3000 enclosures acts like a hub and offers a 1:1 oversubscription ratio. It has 16 internal connectors for downlinks and (16) 1/10GE uplink ports. It supports FCoE, and the uplink ports accept SFP or SFP+ optics.

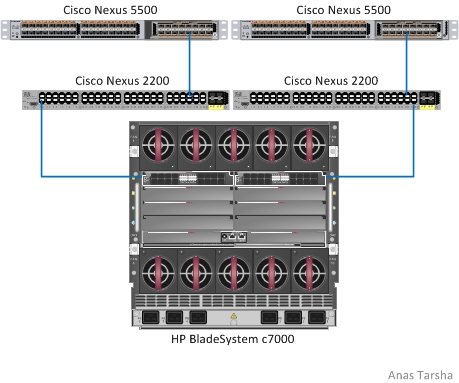

As shown in the picture above, this module can be connected to a Nexus Fabric Extender (FEX) such as the Nexus 2232PP, which offers (32) 10GE ports for server connectivity, or to another FEX that supports only 1GE downlinks if your servers do not need the extra bandwidth. This solution is more attractive than the first option of using the Virtual Connect Flex-10 module because it supports FCoE, so you would not need another module for storage. And because it’s a pass-through, it doesn’t act like a “man in the middle” between the fabric and the blade servers.

Finally, with this solution you have the option of using VM-FEX technology on the Nexus 5500, since both the HP pass-thru module and the Nexus 2200 FEX are transparent to the Nexus 5500. The list price of this module is around $5,000.

Cisco Fabric Extender (FEX) For HP BladeSystem

The Cisco B22 FEX was designed specifically to support the HP BladeSystem c7000 and c3000 enclosures. Similar to the Cisco Nexus 2200, it works like a remote line card and is managed from the parent Nexus 5500, eliminating multiple provisioning and testing points. This FEX has 16 internal connectors for downlinks and (8) 10GE uplink ports. It supports FCoE, and its feature set is on par with the Nexus 2200.
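The oversubscription ratios of the three modules follow directly from their port counts. The sketch below is only an illustration: it assumes every downlink and uplink runs at 10G (the pass-thru uplinks can also run at 1G), and the 2:1 figure for the B22 is derived from its 16 downlinks and 8 uplinks rather than quoted from a datasheet.

```python
from fractions import Fraction


def oversubscription(downlinks, uplinks, down_gbps=10, up_gbps=10):
    """Return downlink-to-uplink bandwidth as a reduced 'N:M' string."""
    ratio = Fraction(downlinks * down_gbps, uplinks * up_gbps)
    return f"{ratio.numerator}:{ratio.denominator}"


# (downlinks, uplinks) as listed for each module in this post.
MODULES = {
    "HP Virtual Connect Flex-10": (16, 8),
    "HP 10GE Pass-Thru": (16, 16),
    "Cisco B22 FEX": (16, 8),
}

if __name__ == "__main__":
    for name, (down, up) in MODULES.items():
        print(f"{name}: {oversubscription(down, up)}")
```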

This is by far the most attractive solution for connecting an HP BladeSystem to a Cisco fabric. With this solution you need to manage only the Nexus 5500 switches, and you get support for FCoE, VM-FEX, and other NX-OS features. The B22 FEX is sold by HP (not Cisco) and its list price is around $10,000. The Nexus 5500 supports up to 24 fabric extenders in Layer 2 mode and up to 16 fabric extenders in Layer 3 mode.
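As a rough sizing aid, the FEX limits above translate into an upper bound on blade-facing ports per Nexus 5500. This is back-of-the-envelope arithmetic that assumes every attached FEX is a B22 with all 16 downlinks in use; a redundant design with two B22 modules per enclosure would give each blade two ports and halve the number of blades served.

```python
B22_DOWNLINKS = 16  # internal downlinks per B22, as listed in this post

# Maximum fabric extenders per Nexus 5500, as listed in this post.
MAX_FEX = {"layer2": 24, "layer3": 16}


def max_blade_ports(fex_count, downlinks_per_fex=B22_DOWNLINKS):
    """Return the upper bound on blade-facing ports behind one parent switch."""
    return fex_count * downlinks_per_fex


if __name__ == "__main__":
    for mode, fex_count in MAX_FEX.items():
        print(f"{mode}: up to {max_blade_ports(fex_count)} blade-facing ports")
```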

Nice post.

BTW, do you know if the B22HP supports NPV up to the VM level? I am planning to run the N5K in NPV mode (so the B22HP also runs in NPV mode). Do you know if the physical ports towards the ESX server will also be capable of doing NPV (multiple FLOGIs from different VMs)?

BTW, you are using the same theme as my blog 🙂