Private VLAN (PVLAN) is a security feature which has been available for quite some time on most modern switches. It adds a layer of security and enables network admins to restrict communication between servers in the same network segment (VLAN). So for example let’s say you have an Email and Web servers in the DMZ in the same VLAN and you don’t want them to communicate with each other but still want each server to communicate with the outside world. Obviously one way to prevent the servers from talking directly to each other is to place each server in a separate VLAN and apply ACLs on the firewall/router preventing communication between the two VLANs. This solution though requires using multiple VLANs and IP subnets. It also requires you to re-IP the servers in an existing environment. But what if you are running out of VLANs or IP subnets and/or re-IPing is too disruptive? Well then you can use PVLAN instead.

With PVLAN you can provide network isolation between servers or VMs which are in the same VLAN without introducing any additional VLANs or having to use MAC access control on the switch itself.

While you can configure PVLAN on any modern physical switch, this post will focus on deploying PVLAN on a virtual distributed switch in a VMware vSphere environment.

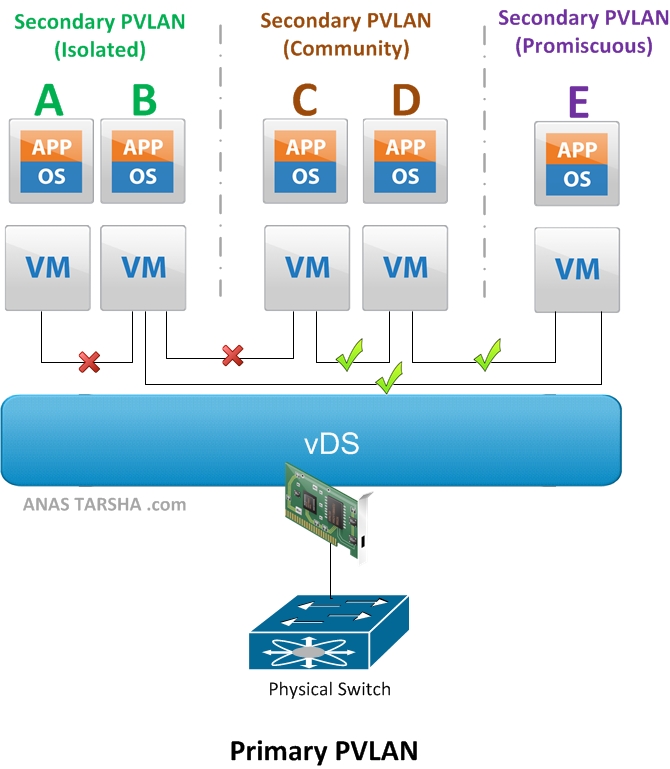

But first let me explain briefly how PVLAN works. The basic concept behind Private VLAN (PVLAN) is to divide up the existing VLAN (now referred to as Primary PVLAN) into multiple segments , called secondary PVLANs. Each Secondary PVLAN type then can be one of the following:

- Promiscuous: VMs in this PVLAN can talk to any other VMs in the same Promiscuous PVLAN or any other secondary PVLANs. On the diagram above, VM E can communicate with A, B, C, and D.

- Community: VMs in this secondary PVLAN can communicate with any VM in the same Community PVLAN and it can communicate with the Promiscuous PVLAN as explained above. However VMs in this PVLAN cannot talk to the Isolated PVLAN. So on the diagram, VM C and D can communicate with each other and communicate also with E.

- Isolated: A VM in this secondary PVLAN cannot communicate with any VM in the same Isolated PVLAN nor with any VM in the Community PVLAN. It can only communicate with the Promiscuous PVLAN. So looking at the diagram again, VM A and B cannot communicate with each other nor with C or D but can communicate with E.

There are few things you need to be aware of when deploying PVLAN in a VMware vSphere environment,:

- PVLAN is supported only on distributed virtual switches with Enterprise Plus license. PVLAN is not supported on a standard vSwitch.

- PVLAN is supported on vDS in vSphere 4.0 or later; or on Cisco Nexus 1000v version 1.2 or later.

- All traffic between VMs in the same PVLAN on different ESXi hosts need to traverse the upstream physical switch. So the upstream physical switch must be PVLAN-aware and configured accordingly. Note that this required only if you are deploying PVLAN on s vSphere vDS since VMware applies PVLAN enforcement at the destination while Cisco Nexus 1000v applies enforcement at the source and therefore allowing PVLAN support without upstream switch awareness.

Share This: